Concurrency in Computer Science

Introduction to Concurrency

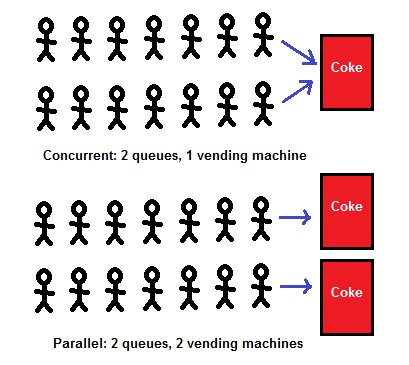

Concurrency is a fundamental concept in computer science in which multiple tasks or processes make progress over overlapping time periods, either by executing simultaneously or by interleaving so that they appear to. It is crucial for improving the efficiency and responsiveness of applications, particularly in systems that demand high performance and efficient resource utilization.

Importance of Concurrency

- Improved Resource Utilization: While one task waits on I/O, another can use the CPU, so the system makes better use of both CPU and I/O resources and achieves higher throughput.

- Responsiveness: In user-facing applications, concurrency allows tasks to run in the background while the user interacts with the application, improving user experience.

- Parallelism: Structuring a program as concurrent tasks is a stepping stone to parallelism, where those tasks are not merely interleaved but executed at the same instant on multiple processors or cores.

Models of Concurrency

There are several models for implementing concurrency in computer systems:

1. Thread-Based Concurrency

- Threads are the smallest units of processing that can be scheduled by an operating system. Multiple threads can exist within the same process and share resources.

- Advantages: Lightweight to create, cheaper to context-switch than processes, and able to share memory directly.

- Challenges: Thread synchronization and the potential for race conditions.
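As a minimal sketch of threads sharing memory within one process, the example below has several threads add their partial results to one shared dictionary, guarded by a lock. The names `count_words` and `run_threads` are illustrative and do not come from any particular library.

```python
import threading


def count_words(lines, totals, lock):
    """Count the words in `lines` and add them to the shared `totals` dict."""
    local_count = sum(len(line.split()) for line in lines)
    with lock:  # only one thread at a time may update the shared dict
        totals["words"] += local_count


def run_threads(chunks):
    """Start one thread per chunk and return the combined word count."""
    totals = {"words": 0}   # shared by every thread in this process
    lock = threading.Lock()
    threads = [
        threading.Thread(target=count_words, args=(chunk, totals, lock))
        for chunk in chunks
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()       # wait until every worker has finished
    return totals["words"]
```

Each thread does its counting on local data and takes the lock only for the brief shared update, which keeps contention low.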

2. Process-Based Concurrency

- Processes are independent units of execution with their own memory space. Each process runs in its own environment and, by default, does not share memory with other processes; any sharing must be set up explicitly through IPC mechanisms.

- Advantages: Greater isolation and stability, reduced risk of data corruption.

- Challenges: Higher overhead for context switching and inter-process communication (IPC).
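The isolation and IPC cost described above can be sketched with separate interpreter processes that communicate only through pipes. This example uses `subprocess` to stay self-contained; in practice Python's `multiprocessing` module is often the more convenient choice. `CHILD_CODE` and `sum_chunks_in_processes` are hypothetical names chosen for illustration.

```python
import subprocess
import sys

# Program run by each child process: read integers from stdin, print their sum.
CHILD_CODE = "import sys; print(sum(int(tok) for tok in sys.stdin.read().split()))"


def sum_chunks_in_processes(chunks):
    """Sum each chunk in its own OS process and return the list of sums."""
    # Launch all children first so they start up concurrently.
    procs = [
        subprocess.Popen(
            [sys.executable, "-c", CHILD_CODE],
            stdin=subprocess.PIPE,
            stdout=subprocess.PIPE,
            text=True,
        )
        for _ in chunks
    ]
    results = []
    for proc, chunk in zip(procs, chunks):
        # Data crosses the process boundary as text over a pipe (IPC);
        # no memory is shared between parent and child.
        out, _ = proc.communicate(" ".join(str(n) for n in chunk))
        results.append(int(out))
    return results
```

Starting an interpreter per chunk is far more expensive than starting a thread, which illustrates the overhead trade-off: the children cannot corrupt each other's data, but every value must be serialized and sent through a pipe.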

3. Asynchronous Programming

- Asynchronous programming lets a program start an operation and continue with other work instead of blocking until the operation completes. This is often achieved using callbacks, promises, or async/await syntax.

- Advantages: Efficient handling of I/O-bound tasks.

- Challenges: Complexity in managing callbacks and error handling.
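A minimal async/await sketch, assuming `asyncio.sleep` as a stand-in for real network or disk waits. The coroutine names `fetch` and `fetch_all` are illustrative only.

```python
import asyncio


async def fetch(name, delay):
    """Pretend to perform an I/O operation that takes `delay` seconds."""
    await asyncio.sleep(delay)  # yields control so other tasks can run
    return f"{name} done"


async def fetch_all():
    """Run both simulated requests concurrently on a single thread."""
    # gather() returns results in the order the awaitables were passed,
    # regardless of which one finishes first.
    return await asyncio.gather(fetch("a", 0.02), fetch("b", 0.01))


if __name__ == "__main__":
    print(asyncio.run(fetch_all()))
```

Because both waits overlap, the total time is close to the longest single delay rather than the sum of the two.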

4. Actor Model

- The Actor Model abstracts concurrency by treating "actors" as independent entities that communicate through message passing.

- Advantages: Simplifies reasoning about concurrent systems and avoids shared state issues.

- Challenges: Message passing can introduce latency and complexity in message handling.
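The actor idea can be approximated in plain Python with a thread that owns its state and a queue that serves as its mailbox. This is a simplified sketch rather than a full actor framework, and `CounterActor` with its message names is hypothetical.

```python
import queue
import threading


class CounterActor:
    """An actor whose private counter is changed only via messages."""

    def __init__(self):
        self._mailbox = queue.Queue()
        self._count = 0  # private state, touched only by the actor's thread
        self._thread = threading.Thread(target=self._run)
        self._thread.start()

    def send(self, message, reply_to=None):
        """Deliver a message; `reply_to` is an optional queue for the answer."""
        self._mailbox.put((message, reply_to))

    def _run(self):
        # Process messages one at a time, in arrival order.
        while True:
            message, reply_to = self._mailbox.get()
            if message == "stop":
                break
            if message == "increment":
                self._count += 1
            elif message == "get" and reply_to is not None:
                reply_to.put(self._count)

    def stop(self):
        """Ask the actor to shut down and wait for its thread to exit."""
        self.send("stop")
        self._thread.join()
```

Callers never read or write `_count` directly, so no lock is needed around it. The cost is that even a simple read requires a round trip through the mailbox.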

Challenges in Concurrency

While concurrency offers many benefits, it also introduces several challenges:

1. Race Conditions

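- Occur when multiple processes or threads access shared data at the same time and at least one of them modifies it without proper synchronization, so the result depends on the exact timing of their execution and becomes unpredictable.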
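The sketch below contrasts an unsynchronized read-modify-write with a lock-protected one. The `Counter` class and `run_increments` helper are illustrative names. Whether the unsafe version actually loses updates on a given run depends on the interpreter and on scheduling, so no specific wrong total is claimed here.

```python
import threading


class Counter:
    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def unsafe_increment(self):
        current = self.value      # read
        # Another thread may update self.value here; its update is then lost.
        self.value = current + 1  # write

    def safe_increment(self):
        with self._lock:          # read and write happen as one atomic step
            self.value += 1


def run_increments(increment, n_threads=4, per_thread=10_000):
    """Call `increment` per_thread times from each of n_threads threads."""
    def worker():
        for _ in range(per_thread):
            increment()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
```

With `safe_increment`, the final value always equals `n_threads * per_thread`. With `unsafe_increment`, it can come out lower whenever two threads interleave between the read and the write.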

2. Deadlocks

- A situation where two or more processes are unable to proceed because each is waiting for a resource that another one holds, forming a cycle in which no process can ever release what the others need.
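One common way to avoid this circular wait is to acquire locks in a single global order. The sketch below assumes a hypothetical `Account` class and `transfer` function: two opposing transfers could deadlock if each locked its source account first, so both instead lock the account with the smaller id first.

```python
import threading


class Account:
    def __init__(self, account_id, balance):
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src, dst, amount):
    """Move `amount` from src to dst; assumes src and dst are different accounts."""
    # Lock ordering: always take the lock of the smaller id first, so two
    # threads transferring in opposite directions cannot each hold one lock
    # while waiting for the other.
    first, second = sorted((src, dst), key=lambda account: account.account_id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount
```

Lock ordering is only one of several strategies; acquiring locks with a timeout and using a single coarser lock are alternatives with different trade-offs.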

3. Starvation

- A condition where a process is perpetually denied the resources it needs to proceed, often due to resource allocation policies.

4. Complexity of Design

- Designing concurrent systems can be complex, requiring careful consideration of synchronization, communication, and error handling.

Conclusion

Concurrency is an essential concept in computer science that enhances the efficiency and responsiveness of applications. Understanding the various models and challenges associated with concurrency is crucial for designing robust and scalable systems. As technology continues to evolve, the need for effective concurrent programming techniques will only grow, making it a vital area of study for software engineers and computer scientists alike.