Understanding Synchronous Operations in Software Development

What Does Synchronous Mean?

In the context of concurrent and asynchronous programming, synchronous refers to operations that occur sequentially: tasks are executed one at a time, and each task waits for the previous one to complete before it starts.

Key Characteristics of Synchronous Operations

- Blocking: A synchronous call blocks the calling thread until it finishes, so any other work on that thread must wait for it to complete.

- Sequential Execution: Tasks run in the order they are initiated, without overlapping.

- Predictable Flow: The program flow is easier to understand since each operation finishes before the next one begins.
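The three characteristics above can be seen in a small Python sketch. The function names `task` and `run_sequentially` are hypothetical, and `time.sleep` stands in for real work. The recorded log shows that each task starts only after the previous one has ended, so the tasks never overlap.

```python
import time

def task(name: str, duration: float, log: list) -> str:
    """Hypothetical unit of work; time.sleep stands in for real processing."""
    log.append(("start", name))
    time.sleep(duration)  # blocks the calling thread until the sleep finishes
    log.append(("end", name))
    return f"{name} done"

def run_sequentially(durations: dict) -> list:
    log = []
    for name, duration in durations.items():
        # Each call returns only after its task has completed,
        # so the next task cannot start until this one ends.
        task(name, duration, log)
    return log

if __name__ == "__main__":
    events = run_sequentially({"A": 0.1, "B": 0.05, "C": 0.02})
    for event in events:
        print(event)
```

Even though task B is shorter than task A, it still finishes after A because it cannot begin until A returns.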

Example of Synchronous Operations

- Synchronous I/O: When reading from a file or a network resource, the program waits for the operation to complete before continuing to the next line of code.
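As a minimal sketch of synchronous file I/O in Python, the standard `open()` and `read()` calls below block until the data has been read. The helper name `read_file_sync` is hypothetical, and the temporary file exists only to keep the example self-contained.

```python
import os
import tempfile

def read_file_sync(path: str) -> str:
    # open() and read() are blocking calls: read() does not return
    # until the file's contents have been read.
    with open(path, "r", encoding="utf-8") as f:
        return f.read()

if __name__ == "__main__":
    # Create a throwaway file so the example does not depend on existing data.
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False, encoding="utf-8") as tmp:
        tmp.write("hello from disk\n")
        path = tmp.name
    try:
        contents = read_file_sync(path)
        # This line runs only after read_file_sync has returned.
        print("Read", len(contents), "characters")
    finally:
        os.remove(path)
```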

Drawbacks of Synchronous Design

- Reduced Efficiency: Because each task must wait for the current one to finish, the program can sit idle while waiting on I/O or network requests, which lowers throughput and increases total running time.

- Blocking: A single slow operation delays every operation queued behind it, which can become a bottleneck in high-performance systems.
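The cost of blocking can be sketched by timing several simulated requests run one after another. Here `slow_request` and `fetch_all_sync` are hypothetical names, and `time.sleep` is an assumed stand-in for network latency. Because each call waits for the previous one, the total elapsed time is roughly the sum of the individual delays.

```python
import time

def slow_request(delay: float) -> float:
    """Hypothetical stand-in for a slow network call."""
    time.sleep(delay)  # the calling thread does nothing else while waiting
    return delay

def fetch_all_sync(delays: list) -> float:
    start = time.perf_counter()
    for d in delays:
        slow_request(d)  # each request starts only after the previous one returns
    return time.perf_counter() - start

if __name__ == "__main__":
    elapsed = fetch_all_sync([0.2, 0.2, 0.2])
    print(f"elapsed: {elapsed:.2f}s")  # roughly the sum of the delays
```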

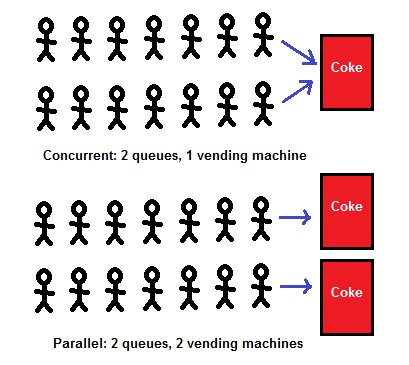

In contrast, asynchronous operations let a program start a task and continue with other work while that task completes, which improves concurrency and overall efficiency, particularly for I/O-bound workloads.
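For comparison, here is a minimal asynchronous sketch using Python's standard `asyncio` module. The names `slow_request_async` and `fetch_all_async` are hypothetical, and `asyncio.sleep` stands in for non-blocking network I/O. Because `asyncio.gather` lets the waits overlap, the elapsed time is close to the longest single delay rather than the sum.

```python
import asyncio
import time

async def slow_request_async(delay: float) -> float:
    """Hypothetical non-blocking stand-in for a network call."""
    await asyncio.sleep(delay)  # yields to the event loop instead of blocking
    return delay

async def fetch_all_async(delays: list) -> float:
    start = time.perf_counter()
    # gather runs the coroutines concurrently, so their waits overlap
    await asyncio.gather(*(slow_request_async(d) for d in delays))
    return time.perf_counter() - start

if __name__ == "__main__":
    elapsed = asyncio.run(fetch_all_async([0.2, 0.2, 0.2]))
    print(f"elapsed: {elapsed:.2f}s")  # close to 0.2s rather than 0.6s
```

This only helps when the work is waiting on I/O; CPU-bound work running in a single event loop still executes one piece at a time.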